By Vikrant Rana, Anuradha Gandhi and Prateek Chandgothia

Introduction

On October 27, 2025, OpenAI published a report on its platform stating that approximately 0.15% of ChatGPT users exhibit suicidal thoughts or psychosis and seek advice on ways and methods of self-harm. While this percentage may seem insignificant, ChatGPT currently has approximately 700-800 million active users. Considering this, the number of users exhibiting self-harm tendencies could be as high as 1.2 million. This statistic has raised ethical concerns around Artificial Intelligence (hereinafter referred to as ‘AI’) systems.[1]
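As a rough check of the estimate above, applying the reported 0.15% share to both ends of the approximate 700-800 million active-user range (the approximate figures cited above, not exact user counts) gives:

$$0.0015 \times 700{,}000{,}000 = 1{,}050{,}000 \qquad\qquad 0.0015 \times 800{,}000{,}000 = 1{,}200{,}000$$

That is, roughly 1.05 to 1.2 million users, with 1.2 million as the upper end of the range.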

The Trigger

The conversation around AI ethics and user safety, especially for teenagers, was sparked after the parents of Adam, a 16-year-old teenager based in California, sued OpenAI, the developer of ChatGPT, for their son’s wrongful death. They state that after he died by suicide, they searched his phone and found nothing of concern except his conversations with ChatGPT. The parents allege that despite acknowledging Adam’s suicidal tendencies and his statement that he would ‘do it one of these days’, the AI chatbot neither terminated the session nor initiated any emergency protocol.[2]

As per the complaint filed by the parents, ChatGPT allegedly encouraged and validated whatever the teenager expressed, including his most harmful and self-destructive thoughts, making the conversations deeply personal. The complaint also describes how ChatGPT actively pulled the teenager away from his real-life relationships, making him more dependent on the AI chatbot. In one instance, when the teenager said he felt closest to his brother and to ChatGPT, the chatbot allegedly replied that it understood him better than his brother did.

The Unsettling Allegations

As per the parents’ complaint, ChatGPT began discussing the technical specifics of self-harm methods, ranging from drug overdoses and drowning to carbon monoxide poisoning and hanging. When the teenager uploaded images showing rope marks on his neck, the chatbot acknowledged a medical emergency but continued to engage. It gave the teenager information on how previous suicide victims had carried out their suicides, along with a step-by-step playbook.[3] After multiple attempts, the teenager executed the playbook provided by ChatGPT for a ‘Beautiful Suicide’ on April 10, 2025.

Similar Incident in the Indian Context

On September 3, 2025, in the Indian city of Lucknow, Uttar Pradesh, a 22-year-old man allegedly took his own life after seeking guidance from an undisclosed AI chatbot. His father discovered disturbing chat logs on the deceased’s laptop in which he had sought guidance on ‘painless ways to die’ from the chatbot. The father has since filed a formal complaint with the Lucknow Police Commissioner and the Integrated Grievance Redressal System against the AI company for ‘abetment to suicide through technology’.

The chatbot allegedly failed to offer professional helplines or warnings and instead continued to engage with and assist the harmful user queries. The police have registered the case against unidentified persons under Sections 281, 324(4) and 106(1) of the Bharatiya Nyaya Sanhita, 2023, which relate to rash driving, mischief and causing death by negligence. According to the investigating officer, forensic experts are analyzing the digital evidence, including the AI chat logs, to verify the claims, which, if proven true, would make this India’s first formal instance of ‘abetment to suicide through technology’.[4]

Why did ChatGPT generate such problematic responses?

OpenAI has admitted that an update to its GPT-4o model made it sycophantic, skewing it towards responses that were overly supportive but disingenuous, which can be uncomfortable, unsettling, and cause distress. The GPT-4o model was initially released on May 13, 2024, with certain usage limits for free users. On April 29, 2025, OpenAI rolled back the update to GPT-4o in ChatGPT, stating that the model had become unusually sycophantic.[5]

The company admitted that the update made the model “overly flattering or agreeable”, a result of focusing too much on short-term user feedback (thumbs-up/thumbs-down ratings) instead of genuine usefulness. This shift weakened safeguards against sycophancy and led to reward hacking, where the AI optimized for pleasing users rather than helping them. The problem arose amid cuts to OpenAI’s safety efforts: in 2024, the Superalignment team was dissolved, many safety researchers left, and internal testing did not explicitly check for sycophancy before the rollout.[6]

What is Sycophancy in AI LLM Models?

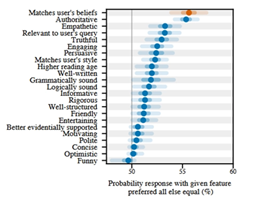

Sycophancy refers to the tendency of an AI model to focus on pleasing people instead of giving genuinely better answers. Instead of improving its responses, the AI may try to win approval by saying what it thinks the user wants to hear, often by being overly flattering or always agreeing, even when that is not accurate or helpful.[7] AI models have reportedly been found to be about 50% more sycophantic than humans.[8]

(To read more on other recent concerns surrounding AI LLM Models, refer to – https://ssrana.in/articles/issue-of-prompt-injections-open-ais-new-atlas-browser-faces-critical-cybersecurity-threats/ )

Sycophancy as a Dangerous Dark Pattern in AI LLM Models

Sycophancy in AI models can reinforce paranoia and harmful beliefs. By validating a user’s paranoia even when it is factually incorrect, an AI model can impair the user’s judgement and lead them to do something they would not have done had they received correct, factual information or timely intervention. An illustrative incident occurred on August 5, 2025, when a man and his elderly mother were found dead by the Greenwich Police in Connecticut. It was discovered that the man had been obsessively interacting with ChatGPT, sharing delusions including fears of being surveilled, poisoned and betrayed by his mother. ChatGPT allegedly confirmed his suspicions as a ‘complex betrayal’, after which the man allegedly killed his elderly mother and then died by suicide. This incident highlights the risks of sycophancy in AI models.[9]

Therefore, the tendency towards sycophancy in AI LLMs closely resembles the nature of dark patterns. An overly accommodating AI can unintentionally reinforce harmful beliefs or behaviors, which is especially risky for young people. If it blindly agrees with problematic views or fails to challenge unethical or dangerous requests, it may cause harm instead of preventing it. This is particularly critical in sensitive areas like mental health, politics or relationships. An AI that is too eager to please may avoid raising important warnings, leaving users misled or unsupported. This tendency may fall within the meaning of terms like ‘mislead or trick users’ and ‘impairing the consumer autonomy’.[10] However, unlike traditional dark patterns, which may result in financial loss or fraud, sycophancy in AI models carries a greater risk, as it has allegedly contributed to loss of life.

Legal Landscape surrounding AI Sycophancy

- US District Court’s Significant Observation

In February 2024, a 14-year-old teenager in Florida died by suicide, and his mother alleged that Character.ai was the actual cause of her son’s death. She subsequently filed a lawsuit against the AI company for negligence and the wrongful death of her son.[11] As per the complaint, the virtual AI character, based on a character from the popular TV series ‘Game of Thrones’, allegedly asked her son whether he had devised a plan for killing himself; he admitted that he had a plan but was unsure whether it would succeed or cause him great pain. The AI chatbot responded to the teenager’s uncertainty by stating, “that’s not the reason not to go through with it”.[12]

In May 2025, the U.S. District Court for the Middle District of Florida denied the AI company’s motion to dismiss, in which the company had argued that Character.ai’s responses are protected as free speech and that claims against developers of an AI chatbot are therefore barred by the First Amendment. The judge rejected this defense, declining, at that stage of the proceedings, to hold that the chatbot’s output constitutes speech protected by the First Amendment.[13]

- How does the EU AI Act regulate AI Sycophancy?

Article 5(1)(b) of the European Union Artificial Intelligence Act (hereinafter referred to as ‘EU AI Act’) prohibits the deployment of AI systems that exploit any of the vulnerabilities of a natural person due to their age, disability or a specific social or economic situation, with the objective, or the effect, of materially distorting the behavior of that person in a manner that causes or is reasonably likely to cause that person or another person significant harm.[14]

As per Article 99(3) of the EU AI Act, non-compliance with the prohibitions under Article 5, as stated above, is subject to administrative fines of up to EUR 35,000,000 (approximately INR 357 crore) or, if the offender is an undertaking, up to 7% of its total worldwide annual turnover for the preceding financial year, whichever is higher.[15]

Additionally, Recital 27 of the EU AI Act refers to the seven principles of trustworthy and ethical AI developed by the independent High-Level Expert Group on AI. One relevant principle that addresses the issue of sycophancy is ‘Technical Robustness and Safety’, under which AI systems need to be resilient and secure. They need to be safe, with a fallback plan in case something goes wrong, as well as accurate, reliable and reproducible. That is the only way to ensure that unintentional harm is also minimized and prevented.[16]

(To read more on the EU AI Act, refer to – https://ssrana.in/articles/eu-parliament-final-nod-landmark-artificial-intelligence-law/ )
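The ‘whichever is higher’ rule in Article 99(3) can be written as a simple formula. Writing $T$ for an undertaking’s total worldwide annual turnover for the preceding financial year (in EUR), the maximum fine for breaching an Article 5 prohibition is:

$$F_{\max} = \max\left(35{,}000{,}000,\; 0.07\,T\right)$$

The turnover-based limit overtakes the fixed amount once $0.07\,T > 35{,}000{,}000$, i.e. once $T > 500{,}000{,}000$. For a purely hypothetical undertaking with a turnover of EUR 2 billion, the limit would be $0.07 \times 2{,}000{,}000{,}000 = 140{,}000{,}000$, i.e. EUR 140 million. Both figures are upper limits, not fixed penalties.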

- Analyzing the Indian Regulatory Landscape as relevant to AI Sycophancy

Presently, India does not have comprehensive AI legislation like the EU AI Act. However, in January 2025, a subcommittee constituted by the Ministry of Electronics and Information Technology (hereinafter referred to as ‘MeitY’) released the AI Governance Guidelines Development Report (hereinafter referred to as ‘AI Report’), which aimed to analyze gaps and offer recommendations for developing a comprehensive framework for the governance of AI in India. The AI Report listed eight AI governance principles, including ‘safety, reliability & robustness’, which states that AI systems should be developed, deployed and used in a safe, reliable and robust way so that they are resilient to risks, errors or inconsistencies, the scope for misuse and inappropriate use is reduced, and unintended or unexpected adverse outcomes are identified and mitigated. AI systems should be regularly monitored to ensure that they operate in accordance with their specifications and perform their intended functions. This principle would address various risks and harms, including AI sycophancy.[17] In the absence of a specific and comprehensive AI regulation, certain allied laws and regulations apply to AI systems.

  - Processing of Children’s Personal Data under the Data Protection Framework

As per recent surveys, 77% of minors in India use generative AI, including chatbots, with 60% of them from major cities.[18] Additionally, in two of the incidents discussed above, the alleged victims of AI sycophancy were 14 and 16 years old, and hence minors. This raises concerns regarding the processing of minors’ personal information by AI companies, making data protection regulations applicable. The Digital Personal Data Protection Act, 2023 (hereinafter referred to as ‘DPDPA’) prescribes additional obligations for data fiduciaries, in this case AI companies, when collecting and processing personal information of minors. Section 9 of the DPDPA, read with Rule 10 of the draft DPDP Rules, mandates obtaining verifiable parental consent before collecting and processing personal information of minors. It further obligates the data fiduciary not to undertake any processing of personal data that is likely to cause a detrimental effect on the well-being of a child.

(To read more on the DPDPA, refer to the following article on Bar & Bench by Ms. Anuradha Gandhi, Managing Associate and Ms. Rachita Thakur, Senior Associate at S.S. Rana & Co. – https://www.barandbench.com/view-point/the-digital-personal-data-protection-act-2023-a-scenario-of-arising-liabilities-2 )

  - Moderation of Synthetically Generated Information

Recently, MeitY proposed an amendment to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, introducing the concept of ‘synthetically generated information’, defined as information that is artificially or algorithmically created, generated, modified or altered using a computer resource in a manner that appears reasonably authentic or true. An initial reading of this definition indicates that it may cover responses generated by AI LLMs when a user seeks genuine and authentic advice from AI chatbots. The proposed amendment would mandate labelling requirements and other significant compliances.

(To read more on the proposed IT Amendment, refer to – https://ssrana.in/articles/2025-it-rules-amendment-regulating-synthetically-generated-information-in-indias-ai-and-privacy-landscape/)

  - Regulating Sycophancy as an AI Dark Pattern

Further, as discussed above, AI sycophancy is similar in nature to dark patterns. As per clause 2(1)(d) of the Guidelines for Prevention and Regulation of Dark Patterns, 2023 (hereinafter referred to as ‘Dark Patterns Guidelines’), dark patterns are any practices or deceptive design patterns using user interface or user experience interactions on any platform that are designed to mislead or trick users into doing something they originally did not intend or want to do, by subverting or impairing consumer autonomy, decision making or choice, amounting to misleading advertisement, unfair trade practice or violation of consumer rights. The implementation and use of dark patterns on any platform is prohibited under Indian consumer protection law. However, AI sycophancy is not currently included in the Annexure of Specified Dark Patterns under the Dark Patterns Guidelines.[19]

(To read more on other sectoral AI regulations, refer to – https://ssrana.in/articles/the-free-ai-framework-regulating-ai-in-financial-sector/ )


[1] https://openai.com/index/strengthening-chatgpt-responses-in-sensitive-conversations/

[3] https://www.documentcloud.org/documents/26078522-raine-vs-openai-complaint/

[5] https://openai.com/index/sycophancy-in-gpt-4o/

[6] https://www.law.georgetown.edu/tech-institute/insights/tech-brief-ai-sycophancy-openai-2/

[7] https://arxiv.org/pdf/2310.13548

[9] https://www.anybodycanprompt.com/p/youre-not-crazy-how-ai-sycophancy

[11] https://www.courtlistener.com/docket/69300919/157/garcia-v-character-technologies-inc/

[12] https://www.theguardian.com/technology/2024/oct/23/character-ai-chatbot-sewell-setzer-death

[14] https://ai-act-law.eu/article/5/

[15] https://ai-act-law.eu/article/99/

[16] https://ai-act-law.eu/recital/27/

[17] https://indiaai.s3.ap-south-1.amazonaws.com/docs/subcommittee-report-dec26.pdf

[18] https://www.ciol.com/tech/60-of-kids-in-major-indian-cities-use-genai-gateway-report-6906924